At Build 2023, we announced that Windows Terminal users will be able to take advantage of natural language AI to recommend commands, explain errors and take actions within Windows Terminal. Since then, we have been listening to customer feedback and iterating on our AI chat experiments in Windows Terminal.

The Windows Terminal team is committed to transparency, and we want to give the open-source community an opportunity to help us define what AI looks like in a terminal application.

As a result, we are open sourcing our work on Terminal Chat, our AI chat feature. Open sourcing it gives developers a chance to try this experience and build it with us.

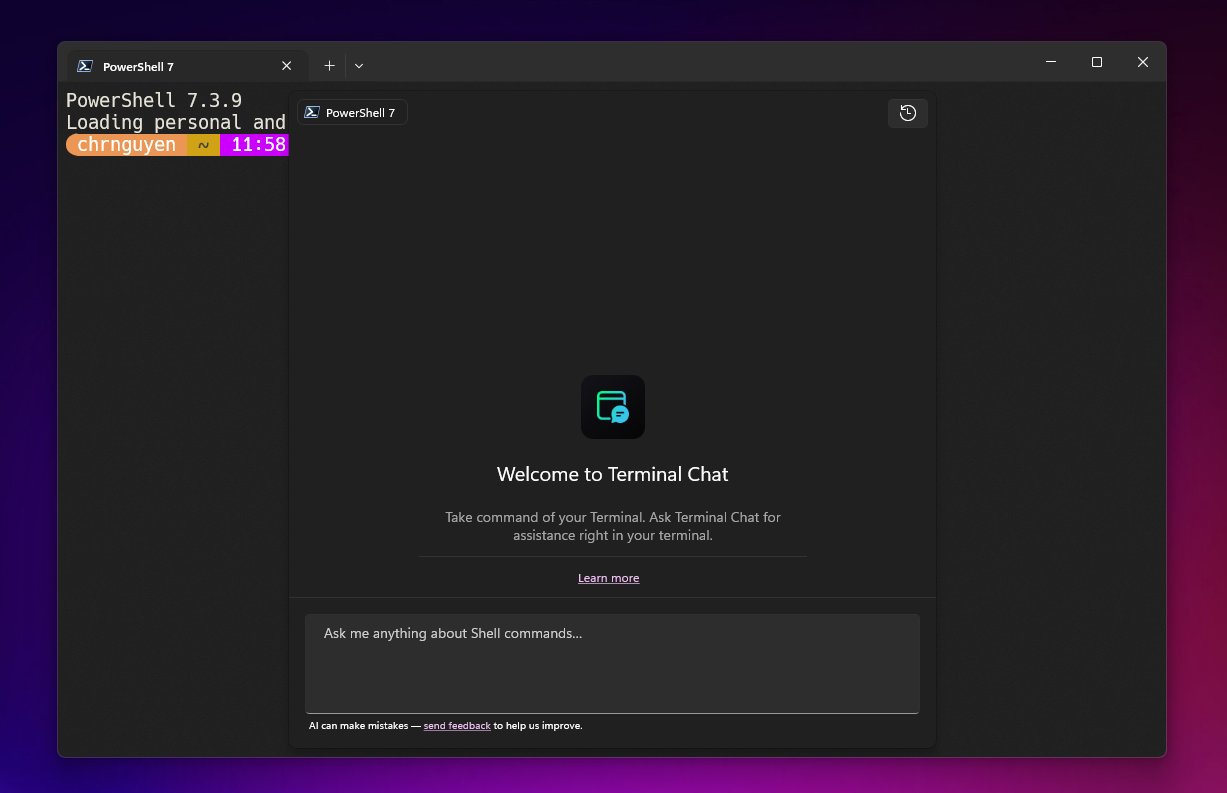

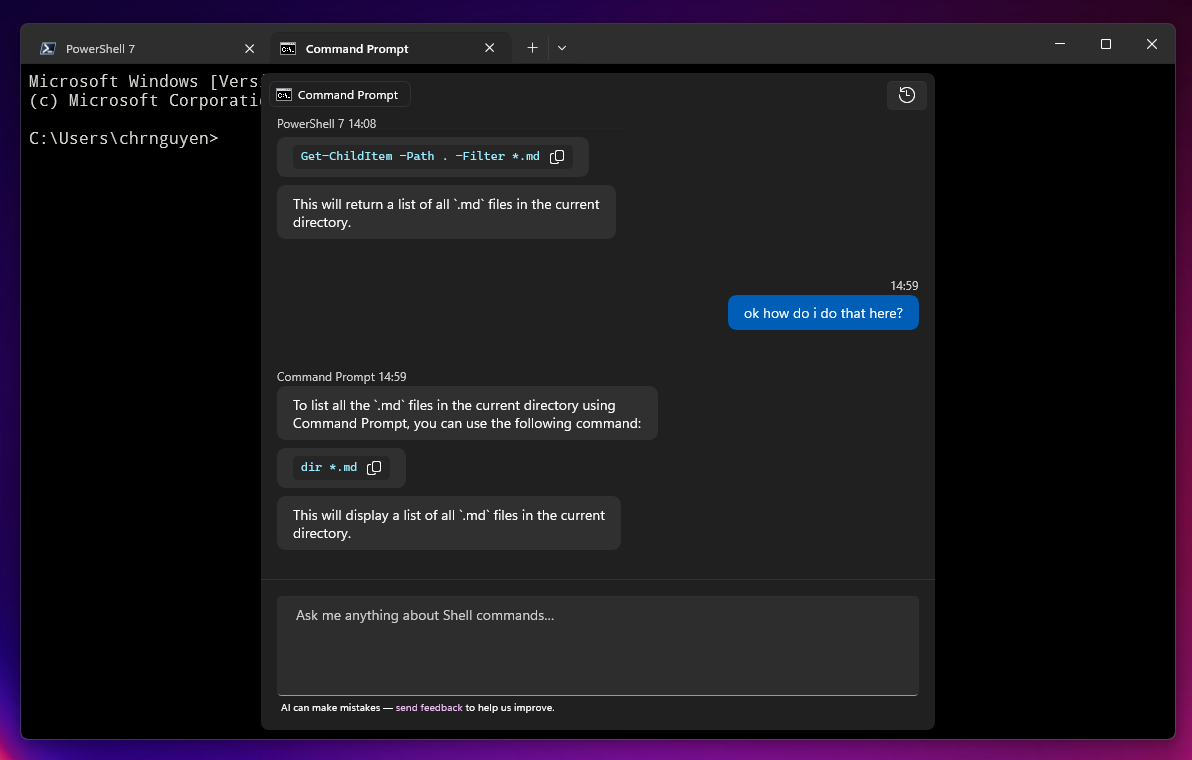

Terminal Chat is a feature in Windows Terminal Canary that allows the user to chat with an AI service to get intelligent suggestions (such as looking up a command or explaining an error message) while staying in the context of their terminal.

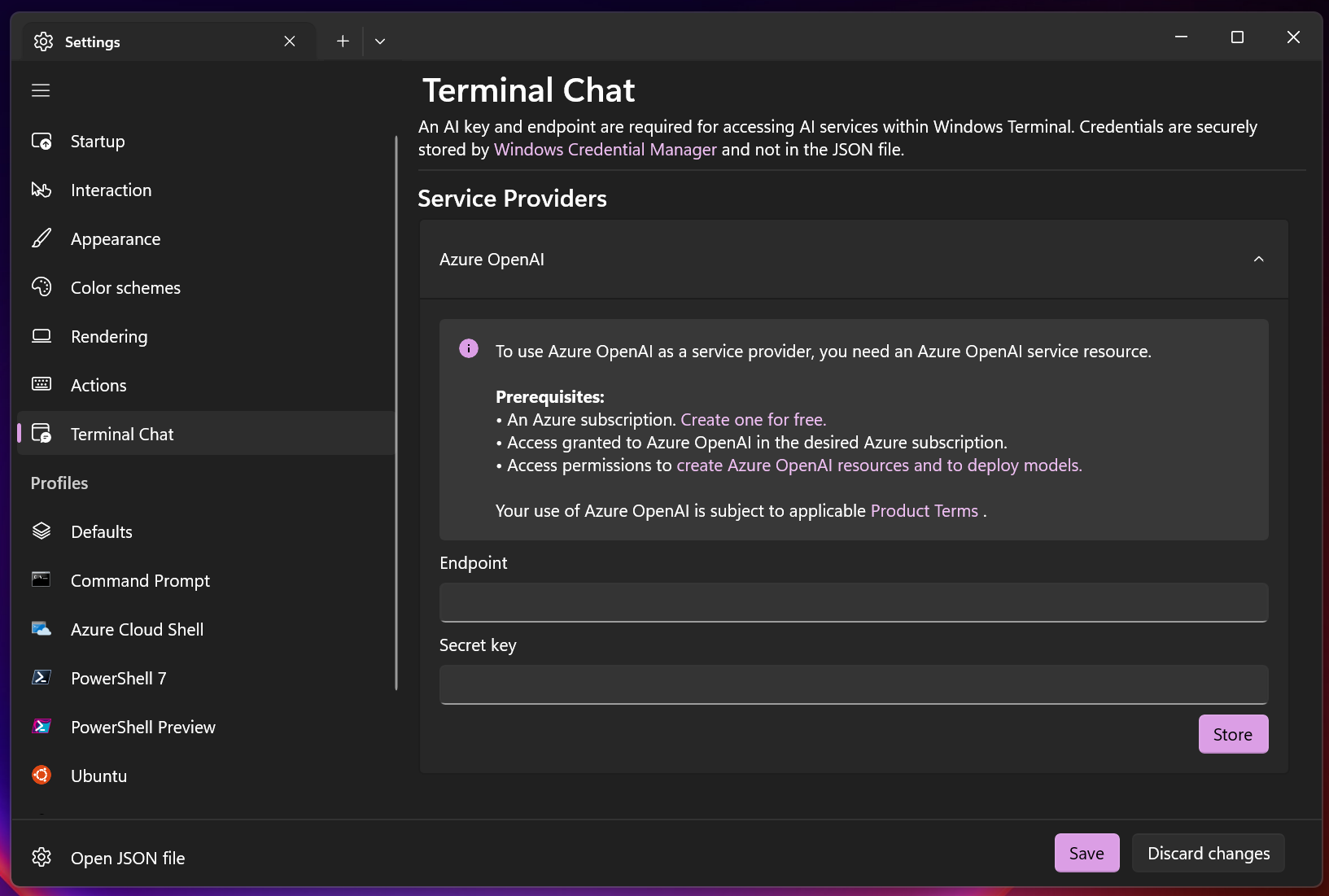

This feature does not ship with its own large-language model. For now, users will need to provide their Azure OpenAI Service endpoint and key to use Terminal Chat.

The code for Terminal Chat, our AI chat experience, can be found in the feature/llm branch of the Windows Terminal repository on GitHub. You can also download the latest build of Windows Terminal Canary from our GitHub repository.

Setting up Terminal Chat

Windows Terminal Canary does not ship with a built-in AI model. To use Terminal Chat, you will need to add an AI service endpoint and key to the Terminal Chat settings of Windows Terminal Canary.

Terminal Chat only supports Azure OpenAI Service for now. To get an Azure OpenAI Service endpoint and key, you will need to create and deploy an Azure OpenAI Service resource.

Creating and Deploying an Azure OpenAI Service resource

To create and deploy an Azure OpenAI Service resource, please follow the official Azure OpenAI documentation on creating and deploying an Azure OpenAI Service resource.

In that documentation, you will learn how to:

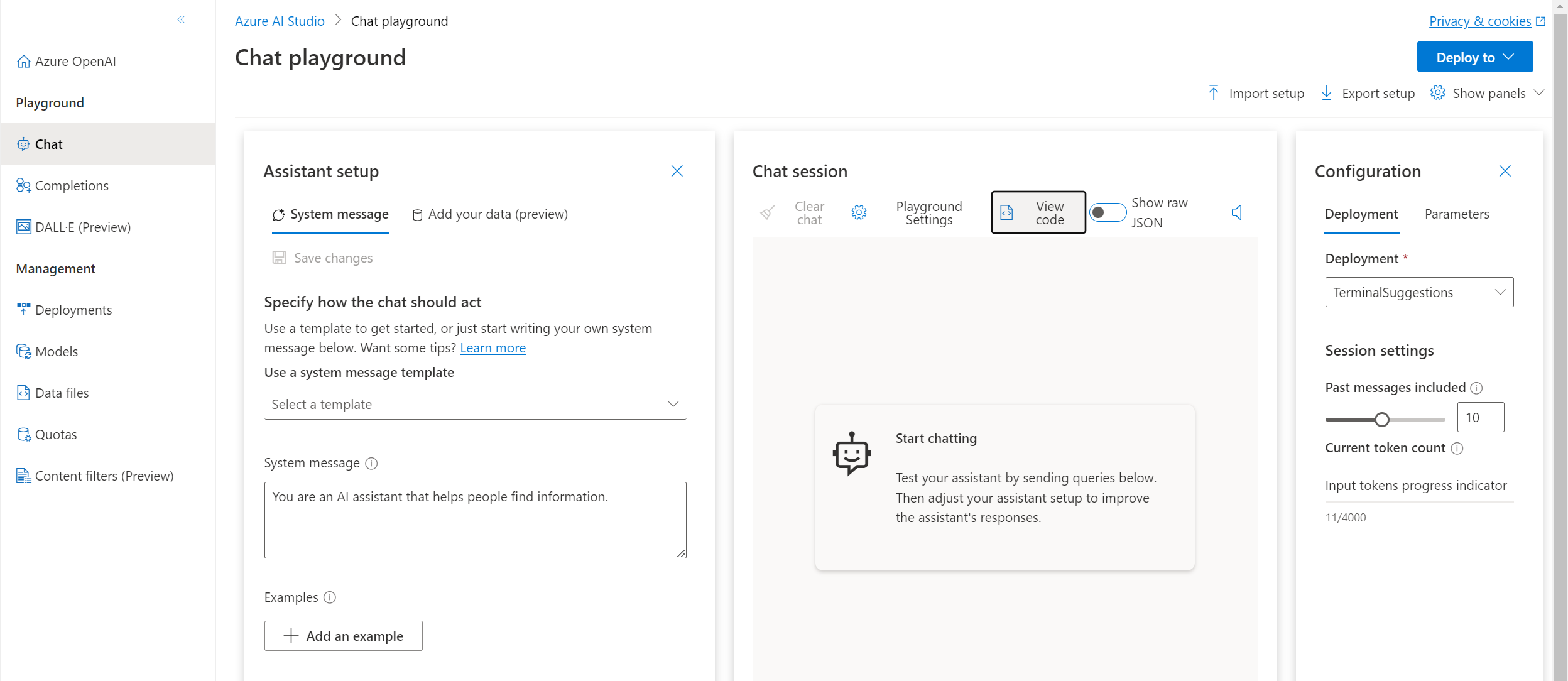

You will need to use a gpt-35-turbo model with your deployment.

After creating a resource and deploying a model, you can find your Azure OpenAI Service endpoint and key by navigating to the Chat playground in Azure OpenAI Studio and selecting View code in the Chat session section.

The View code pop-up dialog will show you a valid Azure OpenAI Service endpoint and key that you can use for Terminal Chat.
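If you are curious what the endpoint and key are used for, the Python sketch below shows how such a pair is typically combined into a request to the Azure OpenAI chat completions REST API. It is not Terminal Chat's implementation (that lives in the feature/llm branch). It assumes `endpoint` is the resource's base URL (for example `https://<resource>.openai.azure.com/`), that `deployment` is the name you gave your gpt-35-turbo deployment (the name used below is hypothetical), and that API version `2023-05-15` works for your resource.

```python
import json
import urllib.request

# Assumption: one published Azure OpenAI REST API version; check the
# Azure OpenAI documentation for the version your resource supports.
API_VERSION = "2023-05-15"


def build_chat_request(endpoint: str, key: str, deployment: str,
                       messages: list) -> urllib.request.Request:
    """Build (but do not send) an Azure OpenAI chat completions request.

    endpoint:   base resource URL, e.g. https://<resource>.openai.azure.com/
    key:        the API key shown next to the endpoint
    deployment: name of your gpt-35-turbo deployment (hypothetical here)
    messages:   list of {"role": ..., "content": ...} dicts
    """
    url = (
        f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
        f"/chat/completions?api-version={API_VERSION}"
    )
    body = json.dumps({"messages": messages}).encode("utf-8")
    # Azure OpenAI authenticates key-based calls with the "api-key" header.
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "api-key": key},
        method="POST",
    )


# Example: build a request for a hypothetical deployment name.
req = build_chat_request(
    "https://contoso.openai.azure.com/",
    "<your-key>",
    "my-gpt35-deployment",
    [{"role": "user", "content": "How do I list files in a directory?"}],
)
```

Passing the returned request to `urllib.request.urlopen` would actually send it; calls made with your key are billed to your own Azure resource.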

Using Terminal Chat

After entering your AI service endpoint and key in the Terminal Chat settings, select Store, then Save, to keep those values. Terminal Chat will then use the AI service associated with that endpoint.

When you click a suggestion from Terminal Chat, it is copied to the input line of your terminal. Terminal Chat will not run the suggestion automatically for you. This way, you have time to reason over the command before executing it 🙂

Terminal Chat also sends the name of your active shell to the AI service as additional context. This lets us get answers tailored to the shell we are currently using, because, as we all know, listing files in a directory is a lot different in Command Prompt than it is in PowerShell 😉

Windows Terminal Canary only communicates with an AI service when the user sends a message. The chat history and the name of the user’s active shell are also appended to the message that is sent to the AI service. Windows Terminal Canary does not save the chat history after the terminal session ends.
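To make the data flow in the two paragraphs above concrete, here is a hedged Python sketch of a chat session that keeps its history only in memory and passes the active shell's name as a system message. The `ChatSession` class, its methods, and the system-prompt wording are hypothetical illustrations, not code from the feature/llm branch; only the `role`/`content` message shape follows the Azure OpenAI chat completions format.

```python
from dataclasses import dataclass, field


@dataclass
class ChatSession:
    """Hypothetical in-memory chat state for one terminal session.

    Nothing is written to disk: when this object is discarded, the
    history goes with it, matching the behavior the blog describes.
    """
    shell_name: str
    history: list = field(default_factory=list)

    def build_messages(self, user_text: str) -> list:
        # Hypothetical system prompt: the shell name is sent as context so
        # the model can tailor answers (e.g. `dir` vs `Get-ChildItem`).
        system = {
            "role": "system",
            "content": f"The user is running {self.shell_name}. "
                       "Suggest commands for that shell.",
        }
        user = {"role": "user", "content": user_text}
        # Earlier turns travel with every new message.
        return [system, *self.history, user]

    def record(self, user_text: str, reply: str) -> None:
        # Save the completed turn so the next request includes it.
        self.history.append({"role": "user", "content": user_text})
        self.history.append({"role": "assistant", "content": reply})


session = ChatSession(shell_name="PowerShell")
first = session.build_messages("How do I list files?")
session.record("How do I list files?", "Get-ChildItem")
second = session.build_messages("And hidden files?")
```

Each call to `build_messages` returns the full list to send, and calling `record` after a reply arrives is what makes the next request carry the earlier turns. Because nothing is persisted, the history disappears along with the session object.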

Tips & Tricks

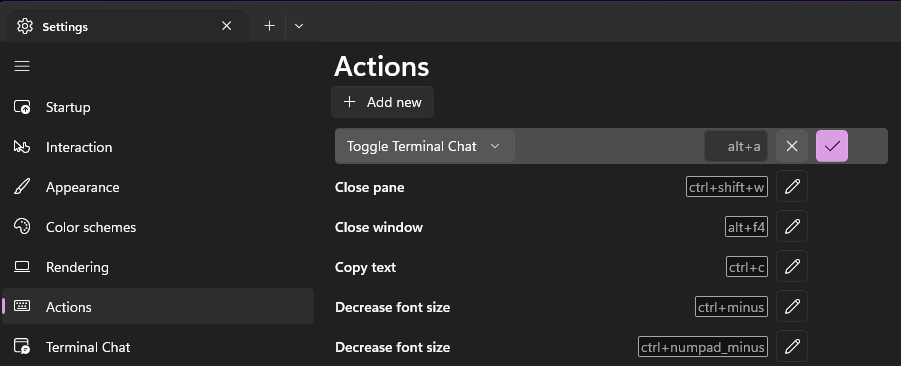

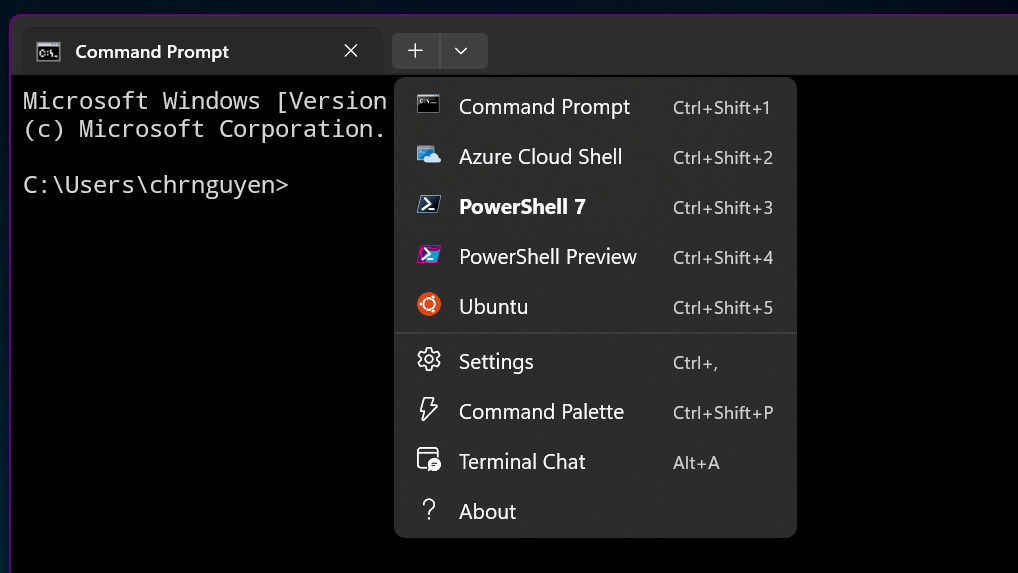

You can bind Terminal Chat to a keybinding Action so you can open the chat pane without clicking “Terminal Chat” in the dropdown menu. To do this, go to Settings, then Actions.

Select + Add new and then pick Toggle Terminal Chat from the dropdown to add a new keybinding Action for the Terminal Chat feature. I used Alt + a for my keybinding Action, but feel free to use any keybinding you want! 🙂

Your new keybinding will also be reflected in the dropdown menu.

Let’s build together!

Windows Terminal exists to push the boundaries of what a terminal emulator can do. However, we understand that “AI in a terminal” can sound daunting. We are committed to transparency and to listening to user feedback.

We strongly believe that the inclusion of the open-source community will help us define our AI roadmap and help us identify the minimal loveable AI feature set that can go into our core product, Windows Terminal. For now, the Terminal Chat feature will only be available in Windows Terminal Canary. It will not be included in builds of Windows Terminal Preview or Windows Terminal stable.

If you are interested in the AI experiences that we are building for Windows Terminal, then please check out the feature/llm branch of the Windows Terminal repository or download the latest build of Windows Terminal Canary.

If you have a feature request or found a bug in Windows Terminal Canary, then please submit a new Issue on our GitHub repository. Send us feedback! Help us grow! And let’s build together!

Is the user responsible for the costs of the Azure service?

I love the comments on this article – mostly “this comment has been deleted”, two identical “This looks awesome!” from two different people, one spam comment and one comment calling out an unrelated bug report.

Please tell us exactly why anyone would entrust a machine learning/artificial "intelligence" model (really just a computer trying to make sense of a bunch of random words scraped from different websites, using a predefined prompt present in every API call made to OpenAI's ChatGPT bot) with a command-line-based user interface. This will become especially useless in most hobbyist and enterprise scenarios, and it's going to fall VERY short when dealing with specific cmdlets or...

This is incredible, I'm looking forward to using it. As a web designer and programmer, this will make my job way easier.

Very interesting experience.

However, the replies in Terminal Chat always break lines at inappropriate places. If this problem is solved, this project will become better and more efficient.

Hey wulu! I’m curious about the line breaks you are seeing. Can you open up a new issue on https://github.com/microsoft/terminal/issues and send us some screenshots? I’d love to know more! 🙂

I am facing the following issue:

https://github.com/microsoft/terminal/issues/6901#issuecomment-1307781312

So this news makes me giggle: the terminal window can’t properly handle line breaks, but at the same time it integrates an LLM.

It fails at such a basic feature, yet should master such an advanced one 🙂

This looks awesome!

This looks awesome!
