-

Notifications

You must be signed in to change notification settings - Fork 3.5k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Ambient Occlusion doesn't work! #10106

Comments

|

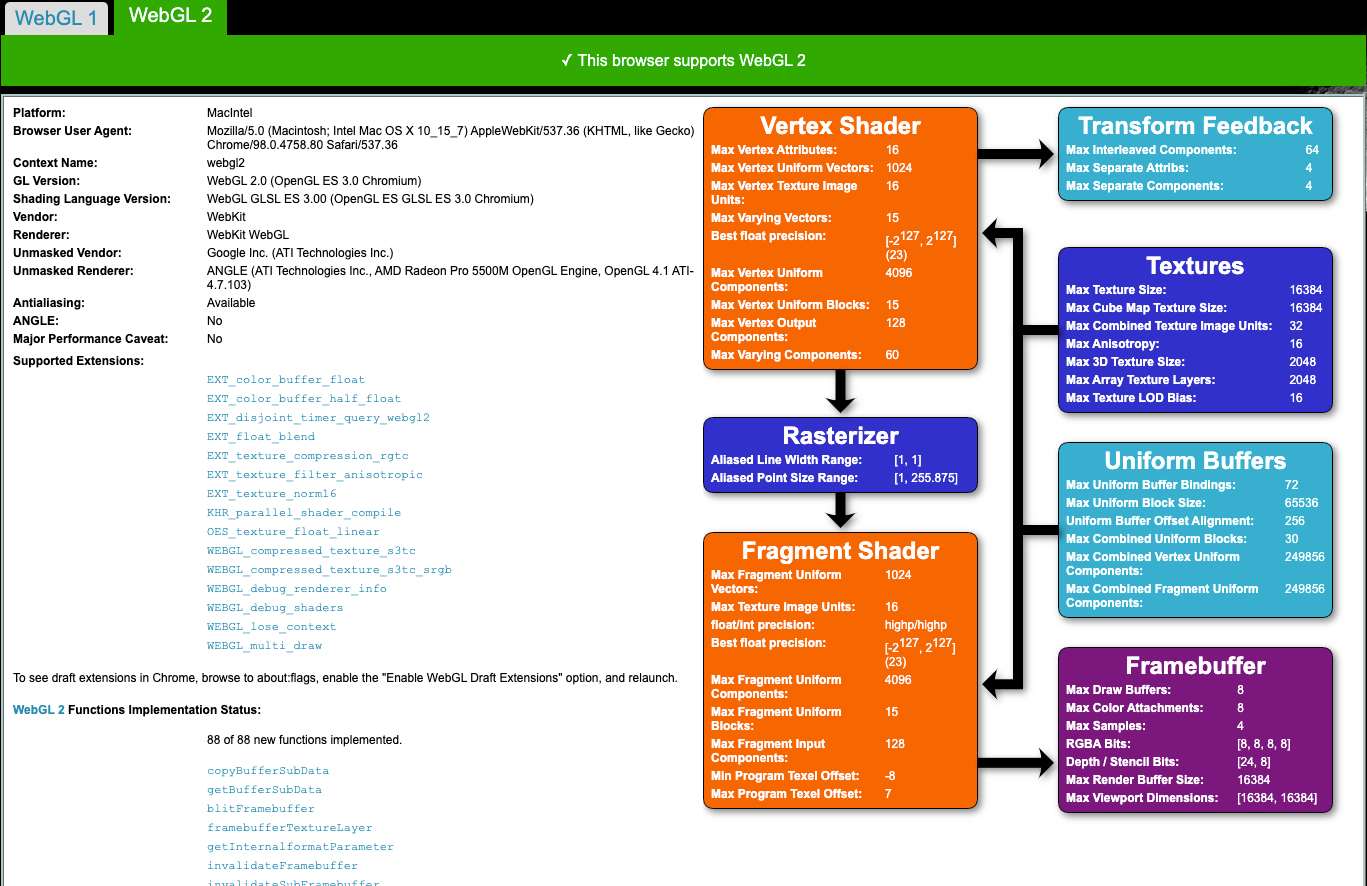

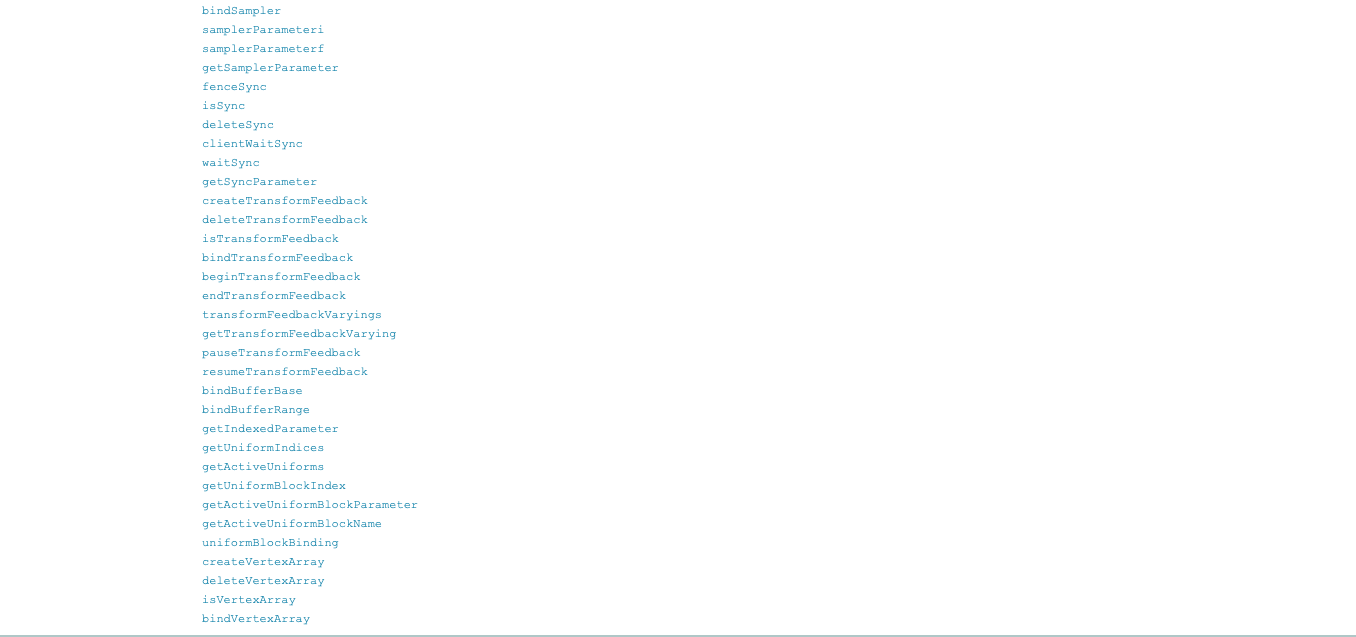

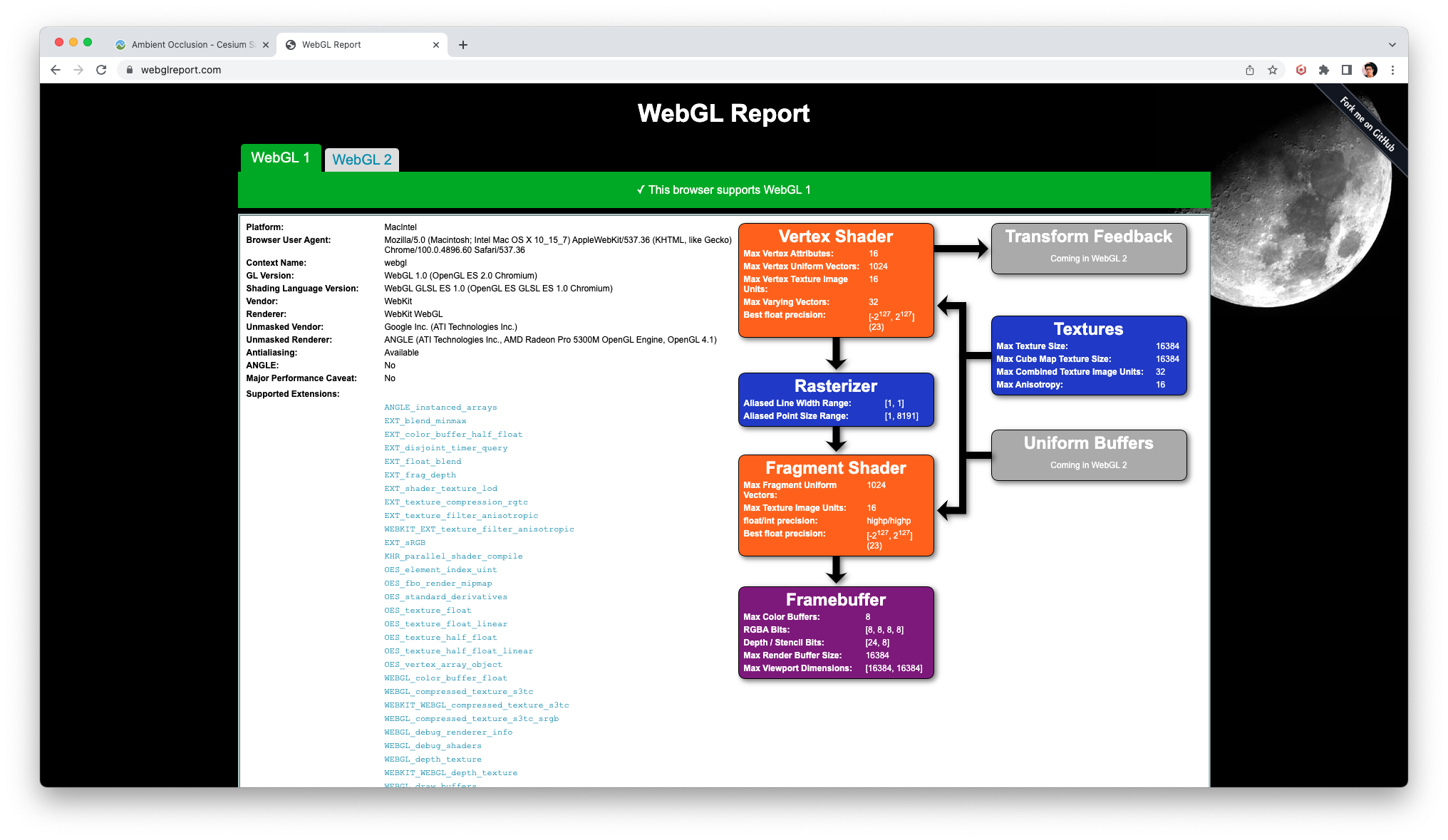

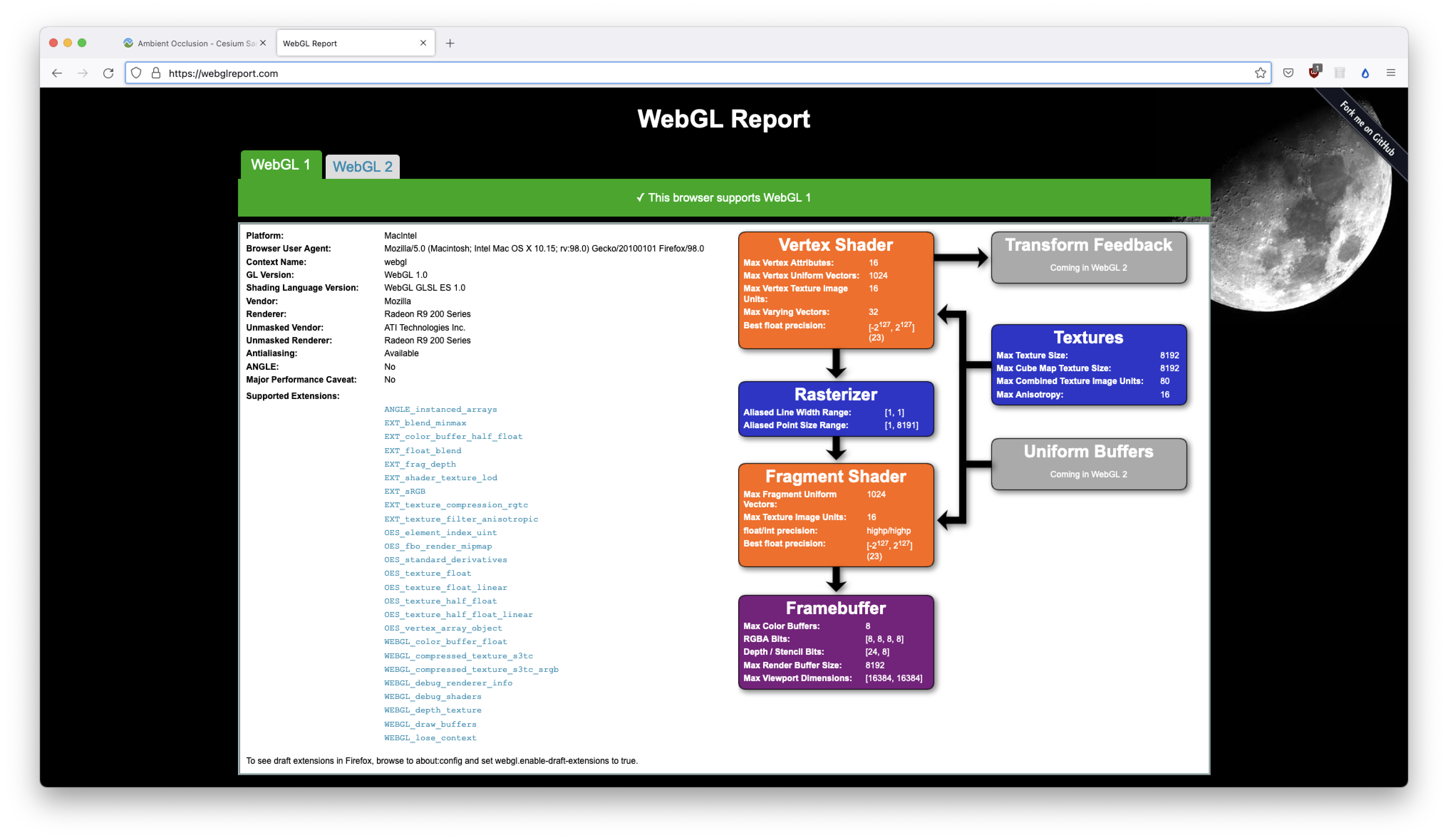

@happyfeet1996 I'm unable to reproduce on MacOS in Chrome. Can you provide some more details about your system by visiting this page and taking a screenshot? |

|

|

it works today,maybe my network go wrong that day |

|

I also reproduce the same issue on macOS Chrome with eGPU. Specifying fragment alpha in AmbientOcclusionModulate seems to resolve it. - out_FragColor.rgb = ambientOcclusionOnly ? ao : ao * color;

+ out_FragColor = vec4(ambientOcclusionOnly ? ao : ao * color, 1.0); |

|

Thanks for the info @shotamatsuda. @jjhembd Could you please verify the potential fix in the comment above, and recommend a PR if appropriate? |

|

Hi @shotamatsuda, thanks for the tip. However, on my machine (Chrome on Windows), I get the same image even if I set the alpha to 0.0 out_FragColor.rgb = ambientOcclusionOnly ? ao : ao * color;

out_FragColor.a = 0.0;Does zero alpha also work on your machine, or is the |

|

@jjhembd In my specific hardware, setting the alpha to zero results in a black screen, as well as not setting the value at all. The importance of This suggests to me that the blending function might not be defined, leading to a hardware or driver-dependent issue. Specifying the alpha value explicitly compensates for it. |

|

For the record, my setup is macOS 13.3.1, Chrome 112.0.5615.49, and AMD Radeon RX 6800 XT GPU. I encountered the issue before Chrome 100 on macOS Monterey with the same eGPU, and it doesn't reproduce in Safari or Firefox. I first noticed the issue around Cesium 1.89.0, but I'm unsure if it is caused by the Chrome version update. |

|

Hi @shotamatsuda, thanks again for all the info. I think it was a mistake in There are two input textures in

vec4 color = texture(colorTexture, v_textureCoordinates);

vec4 ao = texture(ambientOcclusionTexture, v_textureCoordinates);

out_FragColor = ambientOcclusionOnly ? ao : ao * color;The above code gives the same result as before on my setup. Can you please verify if it works on your hardware? If so, we should be ready for a PR. |

|

@jjhembd Yes, I confirmed it works with my hardware, and that should solve OP's issue. Deriving alpha from |

|

Requested on the forum: https://community.cesium.com/t/improve-ambient-occlusion/9639/4 |

|

Major problem is in the quality of stochatic noise and the lack of denoise. HBAO with cross-bilateral filter: |

|

Awesome demo @shotamatsuda! Would you have any interest in contributing this AO algorithm implementation? |

|

CC #11067? |

|

I hope AO will have better effects and performance. It is a good way to improve the effect of Cesium models. At present, it needs to be optimized in terms of performance and effects. Thank you for your efforts. |

|

As discussed offline, the depth buffer for post-processing has a precision problem. To address this, we will try the following (in order):

|

|

Here is an updated Sandcastle displaying the gradient of the depth buffer. Log depth shows strong (flickering) noise in the distance, as well as some spuriously large gradients on smooth surfaces nearer the camera. Linear depth reduces the noise nearer the camera. However, only the frustum nearest the camera is valid. The back frustum is washed out. |

|

I updated the depth buffer to use See the |

|

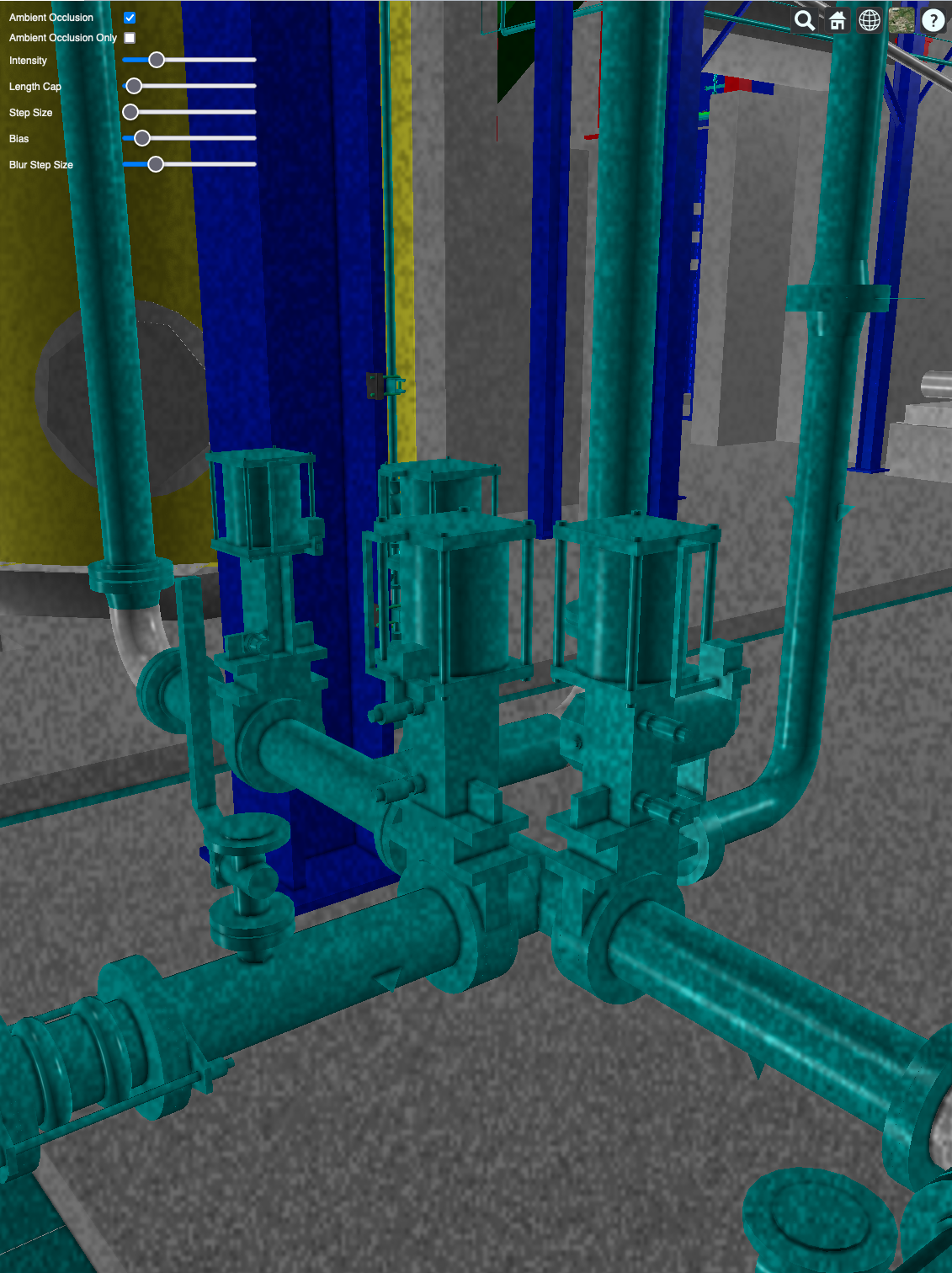

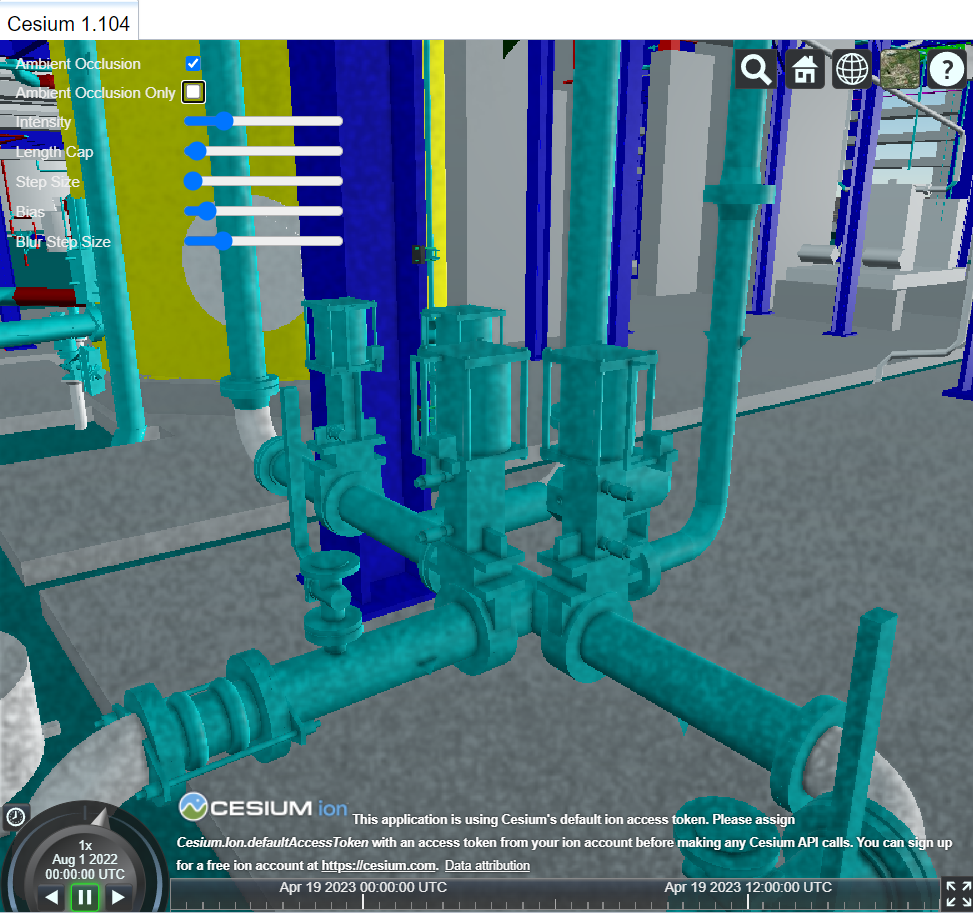

@ggetz highlighted this commit, before which AO did not show a noisy background on flat surfaces. Here is a quick screenshot of AO from a prior commit (70fc40e): For comparison, here is a screenshot from the current And here is the result using the While some minor artifacts are reduced by the I also captured the gradient of the depth buffer prior to #9966. It does not look significantly different from the depth buffer from the current main branch. The background noise problem appears to be something more fundamental--perhaps a depth buffer is being overwritten somewhere? |

|

The background noise is generated when the HBAO stepping is along a direction not aligned with screen X/Y. Here is the AO output with stepping only along X/Y. This is achieved by overriding the "randomVal" texture and using a constant rotation of 0 at every sample. Note the significant reduction in the background on flat surfaces. The remaining background is the result of the noise in the depth buffer. It is reduced by setting the near plane to 1m (instead of 0.1m): This is already approaching the result before #9966. One possible explanation is that before #9966, we had 2 bugs canceling each other:

Then #9966 fixed the first bug, exposing the second bug. But this is speculation--actually tracing texture handling through the major refactor in #9966 would take significant time. Here is the result using a constant rotation of Note the (incorrect) non-zero values on every flat surface. The current noisy result in |

|

A remarkably similar-looking bug, which appears to be related to incorrect normals. In this case, I think world-space geometry was leaking into the normal calculation. |

|

There were some incorrect geometry assumptions in our shader. For example, //Reconstruct Normal Without Edge Removation

vec3 getNormalXEdge(vec3 posInCamera, float depthU, float depthD, float depthL, float depthR, vec2 pixelSize)

{

vec4 posInCameraUp = clipToEye(v_textureCoordinates - vec2(0.0, pixelSize.y), depthU);

vec4 posInCameraDown = clipToEye(v_textureCoordinates + vec2(0.0, pixelSize.y), depthD);Based on what the code is doing, the comment should actually read something like this: // Reconstruct normal with edge removalBut this line: vec4 posInCameraUp = clipToEye(v_textureCoordinates - vec2(0.0, pixelSize.y), depthU);appears to assume Overall, I think our current prototype AO is pretty rough. The choice of algorithm is good, it just needs a rewrite, with careful checking of the input and output of each step. We now know the depth buffer input is somewhat problematic, but:

|

|

Some notes from an offline discussion: The error along rotated axes is much larger than the depth buffer error. It is not clear whether:

We will spend a little time reworking and testing each step of the AO implementation, under the preliminary assumption that the depth buffer is not the main problem. Initial tests/QCs will include:

|

I tried to confirm this, but can't. Before that PR, the result looks fine even with a constant rotation of |

|

I found what changed in #9966: the depth stencil texture was changed from linear to nearest sampling. We can recreate the old result in the current code by making 2 changes:

Current result with a WebGL2 context: Current main with a WebGL1 context, nearest sampling. Note how some of the artifacts are reduced. Current main with WebGL1 context, and linear sampling of the depth texture. Just for interest: here's the linear sampling result with more angular sampling (16 instead of 4) |

|

I don't think we want to require WebGL1 for AO. Two possible options:

One thing that is not clear to me yet: why does log depth produce fewer artifacts in WebGL1 than in WebGL2? |

I agree. Is doing linear interpolation in the shader a possibility? @lilleyse Would you be able to weigh in on the solution here? |

|

I'm surprised linear sampling looks that much better. Is it because coordinates aren't snapped? |

Correct, the current shader does not snap coordinates to the texture grid. I am trying a snapping solution now.

Yes, though this would add to the cost. I think the main performance hit right now is the texture access, and a manually-coded linear interpolation would make that 4x. Snapping would be faster, if we can correctly account for the snapped geometry everywhere. |

|

We now have 4 actionable steps to improve AO:

Other issues arising:

|

|

Item 1 above is addressed by #12201. At each output pixel, we currently step rays outward along several angles to find the angle of the "horizon". The maximum distance of the ray-stepping defines the distance around the point where we allow nearby geometry to "occlude" the ambient light. We currently have 3 factors defining this maximum occlusion distance:

The main limitation of this approach is that parameters that work at one scale do not work when zooming out or in. For example, when zoomed on some details of the Power Plant model, an occlusion radius of less than 1m makes sense. But when zooming out to city scale, we might reasonably expect a skyscraper or other large building to occlude ambient light on buildings 10m or more away. I first tried a naive approach: scale the occlusion radius based on distance from the camera. However, this makes a given feature become darker overall as it moves away from the camera, since the shadowed areas maintain their darkness, but their size grows linearly with distance. One better approach: model the occlusion as something that blurs with distance--i.e., shadowed areas become wider, but less dark. In this way the overall darkness of a given feature would remain constant. We can achieve a blurring effect by simplifying the flow as follows:

We do not need to abort the stepping if a given sample point is too far away from the output point, because the Gaussian weighting will already make the contribution from that point negligible. |

|

I have a preliminary version of the Gaussian-weighted AO in the Here is a quick comparison on the NYC buildings tileset. Some notes:

|

|

@jjhembd Screenshot look fantastic! I don't see the |

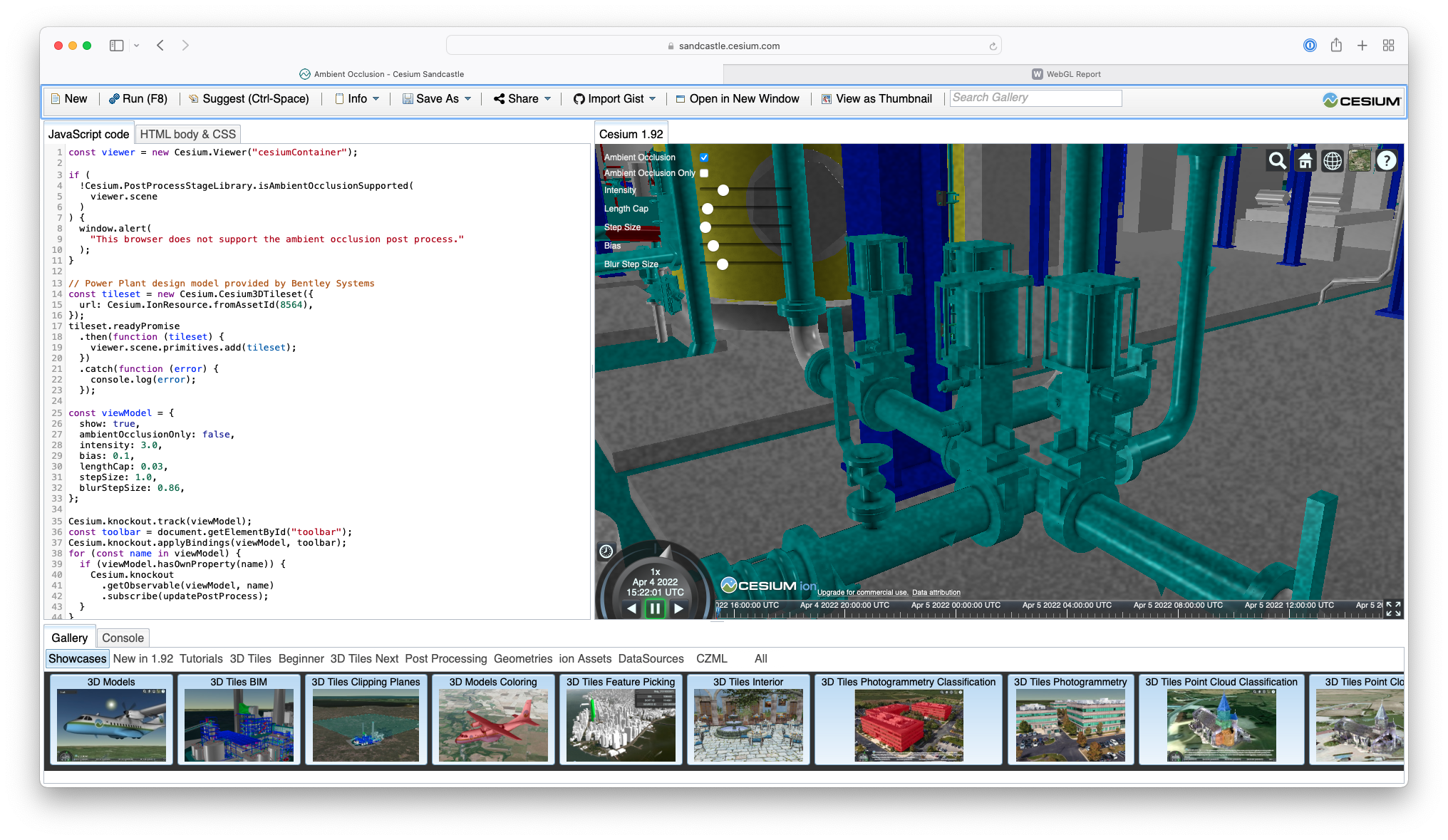

Sandcastle example:Ambient Occlusion

Browser:Chrome

Operating System: macos

when i check ambient occlusion,full screen change to black and can not look anything!

The text was updated successfully, but these errors were encountered: