
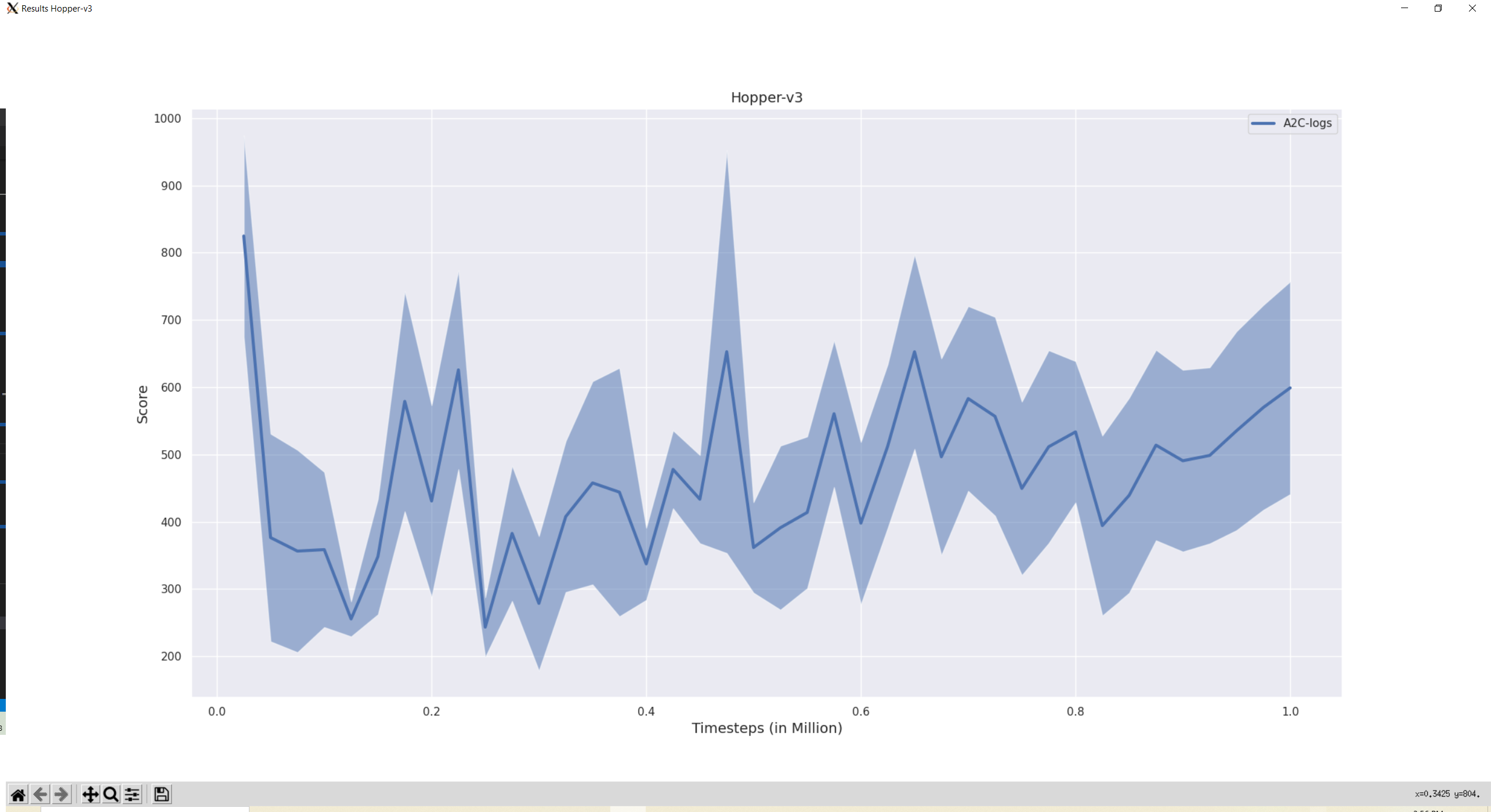

[Question] The performance for Hopper-v3 doesn't get converged for PPO #376

Comments

Sorry, what more information is needed?

This is a fairly common result with PPO; here is one thread among many that discusses it:

You can try decreasing the clipping parameter and stopping the experiment early.
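To make the two suggestions concrete, here is a minimal illustrative sketch in plain Python. `clipped_surrogate` computes PPO's clipped surrogate objective for a batch of probability ratios and advantages, where `clip_eps` stands for the clipping parameter (exposed as `clip_range` in Stable-Baselines3's PPO). `early_stop_index` is a hypothetical helper, not part of any library. It shows one simple way to decide when to stop a run once evaluation returns stop improving, so you can keep the best checkpoint from before a collapse.

```python
"""PPO's clipped surrogate objective plus a simple early-stopping rule.

Illustrative sketch: ratios and advantages are plain lists, `clip_eps` stands
for the clipping parameter, and `early_stop_index` is a hypothetical helper,
not a library function.
"""
from typing import List, Optional


def clipped_surrogate(ratios: List[float], advantages: List[float], clip_eps: float) -> float:
    """Batch mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A), to be maximized."""
    total = 0.0
    for r, a in zip(ratios, advantages):
        # Clamp the probability ratio into [1 - eps, 1 + eps].
        clipped_r = min(max(r, 1.0 - clip_eps), 1.0 + clip_eps)
        # Taking the minimum removes any incentive to push r outside the band.
        total += min(r * a, clipped_r * a)
    return total / len(ratios)


def early_stop_index(eval_returns: List[float], patience: int) -> Optional[int]:
    """Index of the evaluation at which to stop: the first one that is
    `patience` evaluations past the best return without beating it.
    Returns None if that never happens."""
    best = float("-inf")
    since_best = 0
    for i, ret in enumerate(eval_returns):
        if ret > best:
            best, since_best = ret, 0
        else:
            since_best += 1
            if since_best >= patience:
                return i
    return None


if __name__ == "__main__":
    ratios, advantages = [1.5, 0.6], [1.0, -1.0]
    for eps in (0.2, 0.1):
        print(f"clip_eps={eps}: objective={clipped_surrogate(ratios, advantages, eps):.3f}")
    # A made-up return curve that rises, collapses, and recovers.
    print(early_stop_index([100, 900, 1000, 80, 60, 950], patience=3))
```

A smaller `clip_eps` caps how far a single update can move the policy before the objective stops rewarding further change, which usually trades learning speed for stability. Stable-Baselines3's PPO also has a `target_kl` argument. It stops the gradient epochs of one update early, which is a different mechanism from stopping the whole run.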

Thank you for your suggestions; I will try them. Based on my experiment results, I noticed two more things:

In that case, can I regard this as an issue specific to Hopper-v3 rather than an issue with PPO?

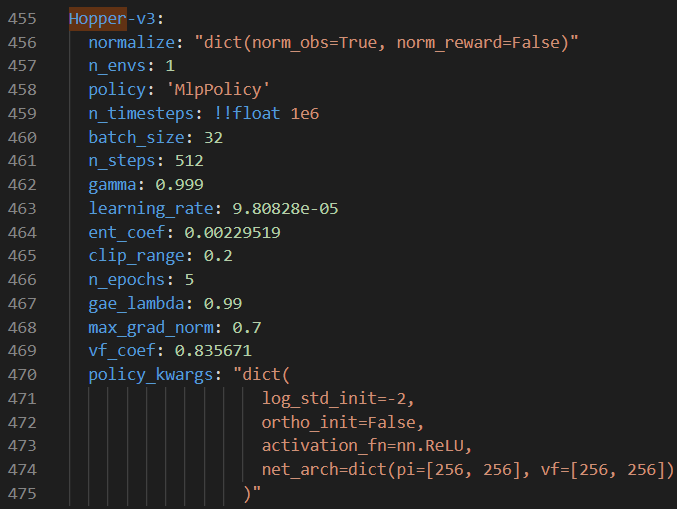

The hyperparameters used and your system/library information (OS, Gym version, MuJoCo version, SB3 version, ...).
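A minimal sketch for gathering the requested system and library versions using only the Python standard library. The distribution names checked (`gym`, `mujoco-py`, `mujoco`, `stable-baselines3`, `torch`) are assumptions about what is installed; any package that is missing is reported as "not installed".

```python
"""Collect OS, Python, and library versions for a bug report (sketch)."""
import platform
from importlib import metadata
from typing import Dict, Iterable

# Assumed distribution names; adjust to match your environment.
PACKAGES = ("gym", "mujoco-py", "mujoco", "stable-baselines3", "torch")


def collect_system_info(packages: Iterable[str] = PACKAGES) -> Dict[str, str]:
    info = {
        "OS": platform.platform(),
        "Python": platform.python_version(),
    }
    for name in packages:
        try:
            info[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            info[name] = "not installed"
    return info


if __name__ == "__main__":
    for key, value in collect_system_info().items():
        print(f"{key}: {value}")
```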

❓ Question

Hi there,

I ran into this issue when training an agent with PPO on Hopper-v3. Here is the performance across 5 seeds, plotted by running: `python3 scripts/all_plots.py -a ppo --env Hopper-v3 -f logs/downloaded`

The training commands look like this: `python train.py --algo ppo --env Hopper-v3 --seed 500X`

The seeds range from 5000 to 5004, and the default hyperparameters are used. The return always climbs quickly to about 1K, then drops sharply to under 100, then climbs back to about 1K, and so on.
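The five runs described above can be scripted as follows. This is a sketch that assumes it is launched from the directory containing `train.py` and uses only the flags quoted in this issue. It calls the current interpreter (`sys.executable`) rather than a bare `python`. By default it only prints the commands; pass `--run` to launch them one after another.

```python
"""Build (and optionally run) the PPO Hopper-v3 training commands for seeds 5000-5004."""
import subprocess
import sys
from typing import Iterable, List


def build_commands(seeds: Iterable[int], algo: str = "ppo", env: str = "Hopper-v3") -> List[List[str]]:
    # One argv list per seed, mirroring `python train.py --algo ppo --env Hopper-v3 --seed 500X`.
    return [
        [sys.executable, "train.py", "--algo", algo, "--env", env, "--seed", str(seed)]
        for seed in seeds
    ]


if __name__ == "__main__":
    for cmd in build_commands(range(5000, 5005)):
        print(" ".join(cmd))
        if "--run" in sys.argv[1:]:
            # Runs sequentially; check=True stops at the first failing run.
            subprocess.run(cmd, check=True)
```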

I have only encountered this issue with Hopper-v3 (A2C suffers from it as well); training works well in other environments.

Is there anything I did wrong? Any help is appreciated!

Checklist
